Synchronicity is a term introduced by Carl Jung in 1950 to describe the simultaneity of two events connected acausally: the coincidence of two or more atemporal (and therefore not synchronous) events, linked by a similar meaningful content. Jung distinguishes synchronicity from "synchronism", that is, events that occur simultaneously, at the same time: dancers performing the same step at the same pace, two clocks showing the same time, a metronome and music following the same rhythm, and so on. These events occur without any connection to meaning, whether causal or random, because they are purely contemporaneous actions. Synchronicity, by contrast, rests on different assumptions, which in everyday life translate into experiences such as thinking of a person and shortly afterwards receiving a phone call bringing news of them, naming a number and then seeing a car with the same number on its plate, or reading a sentence that strikes us and shortly afterwards hearing it repeated by someone else. Such events sometimes give the distinct impression of being precognitive, related to a kind of inner clairvoyance, as if these signals had been artfully scattered along our daily path to "communicate something about ourselves alone and our inner dialogue": a kind of external response, positive or negative, objectively impersonal and symbolically represented. By analogy with causality, which acts in the direction of the progression of time and links two phenomena occurring in the same space at different times, a principle is assumed to exist that connects two phenomena occurring at the same time but in different spaces.
In practice, it is assumed that, alongside the familiar logical sequence governed by the principle of causality, in which events produced by a cause occur at different times, there exists another in which events occur at the same time but in two different spaces; being random, these events are not directly linked as cause and effect, and they correspond perfectly to the principle of atemporality.

In physics, particles are usually described by a wave function that evolves according to the Schrödinger equation. In particular, the superposition principle plays a fundamental role in explaining all observed interference phenomena. This behavior, however, contrasts with classical mechanics: at the macroscopic level, a superposition of distinct states cannot be observed.
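As a minimal illustration of the superposition principle, the sketch below models an idealized double slit as two paths of equal amplitude, an assumption made purely for illustration. Adding the amplitudes before squaring (coherent superposition) produces an interference term; adding the probabilities, as when the path taken is known, does not. The function `intensity` and its `phase_difference` parameter are hypothetical names, not taken from any library.

```python
import cmath
import math


def intensity(phase_difference: float, coherent: bool) -> float:
    """Detection probability at one screen point in an idealized two-path model.

    Each path contributes an amplitude of modulus 1/sqrt(2); the second path
    carries an extra phase `phase_difference` (a hypothetical stand-in for the
    path-length difference between the two slits).
    """
    psi1 = 1 / math.sqrt(2)
    psi2 = cmath.exp(1j * phase_difference) / math.sqrt(2)
    if coherent:
        # Superposition: add amplitudes first, then square -> interference term.
        return abs(psi1 + psi2) ** 2
    # Which-path information available: add probabilities, no interference term.
    return abs(psi1) ** 2 + abs(psi2) ** 2


for phi in (0.0, math.pi / 2, math.pi):
    print(f"phase {phi:.3f}: coherent {intensity(phi, True):.3f}, "
          f"which-path {intensity(phi, False):.3f}")
```

The coherent values follow 1 + cos φ, oscillating between 2 and 0, while the which-path values stay at 1 for every phase.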

A well-known example is the paradox of Schrödinger's cat: a cat (like any living being) cannot be both alive and dead. A question then arises: is there a boundary between the quantum and the classical regime? The Copenhagen interpretation suggests an affirmative answer: performing a measurement on a quantum system amounts to making it observable, and therefore "classical". For example, if in the double-slit experiment we observe which path a particle takes, the interference is destroyed (principle of complementarity). The mechanism responsible for this phenomenon is called the collapse of the wave function and was introduced by von Neumann. However, if there is a boundary between quantum and classical, it is not clear where it should be drawn, or why it exists: the collapse of the wave function is merely postulated. These problems are addressed by the theory of decoherence, whose basic idea is the following: the laws of quantum mechanics, starting from the Schrödinger equation, which in principle apply to isolated systems, also apply to macroscopic ones. When a quantum system is not isolated from its surroundings, as during a measurement, it becomes entangled with the environment (which is also treated quantum-mechanically); according to the theory, this has crucial consequences for the preservation of coherence. In particular, if the system is prepared in a coherent superposition of states, entanglement with the environment leads to a loss of coherence between the different parts of the wave function corresponding to the superposed states.
After a characteristic decoherence time, the system is no longer in a superposition of states but in a statistical mixture. According to the theory, the difference between microscopic and macroscopic systems is that the former can be isolated from their surroundings (so that coherence is easily maintained for a sufficiently "long" time), whereas the same cannot be said of the latter, for which the interaction with the environment must inevitably be taken into account. Consequently, it is virtually impossible to observe a superposition of macroscopically distinct states, because even if one could prepare it (in itself difficult, if not forbidden by the theory), it would be far too short-lived.
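The transition from superposition to statistical mixture can be sketched with a two-level system under a simple pure-dephasing model, an assumption chosen for illustration rather than a model specified in the text: the populations stay fixed while the off-diagonal coherences decay as exp(-t/τ_D). The purity Tr(ρ²) then falls from 1 (pure superposition) to 0.5 (maximally mixed). The function names and the decoherence time `tau_d` are hypothetical.

```python
import math


def dephased_density_matrix(t: float, tau_d: float) -> list[list[float]]:
    """2x2 density matrix of the superposition (|0> + |1>)/sqrt(2) under an
    assumed pure-dephasing model: populations stay at 0.5, while the
    off-diagonal coherences decay as 0.5 * exp(-t / tau_d).
    """
    coherence = 0.5 * math.exp(-t / tau_d)
    return [[0.5, coherence], [coherence, 0.5]]


def purity(rho: list[list[float]]) -> float:
    """Tr(rho^2): 1 for a pure state, 0.5 for a maximally mixed two-level state."""
    return sum(rho[i][k] * rho[k][i] for i in range(2) for k in range(2))


tau_d = 1.0  # hypothetical decoherence time, arbitrary units
for t in (0.0, 1.0, 5.0, 20.0):
    rho = dephased_density_matrix(t, tau_d)
    print(f"t = {t:5.1f}: coherence {rho[0][1]:.4f}, purity {purity(rho):.4f}")
```

For this model the purity is 0.5 + 0.5·exp(-2t/τ_D), so after a few decoherence times the state is, for practical purposes, an equal statistical mixture of |0⟩ and |1⟩.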

Returning, then, to neutrinos, causality and the speed of light: the problem lies in the interpretation of the concept of time. One of the biggest mistakes of theoretical physics, in fact, was to regard time as a "real" physical entity rather than merely the human perception and measurement of changes in the state of matter. This mistakenly led to the belief that time could be "stretched" (contracted or dilated) as if it were a piece of matter. It is already difficult to imagine contracting or dilating a length, and therefore a distance between material points (which exist only in mathematical or geometric theory, not in physical reality), unless that succession of "non-points" is itself matter; time is not even that. It is just an abstraction, a mental concept, and a subjective one at that or, to put it in Einstein's terms, relative. The entire structure of spacetime is a mere mathematical abstraction, not a physical reality. What really exists is only matter, in which the link between cause and effect is preserved by its continuous and atemporal nature. The "entanglement" experiments performed by Alain Aspect in the 1980s lend strong support to this hypothesis. In this framework, speed is nothing other than the variation of the state function of matter in an ordinary Euclidean, material and atemporal space.

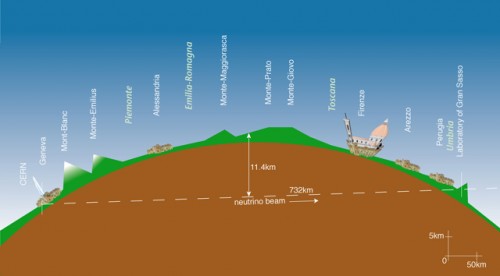

So what really happened between CERN and Gran Sasso? The neutrinos could only have taken a shortcut, that is, the chord subtending the arc of the curve followed by the theoretical photon path. Consider the 730 km chord subtending the arc of the circular segment that separates the CERN and Gran Sasso laboratories. Taking the Earth's radius as approximately R = 6,378 km, simple trigonometry gives a central angle θ of about 0.114 rad. The (presumed) advance of the neutrinos with respect to photons is approximately Δt = 60 ns = 60 × 10⁻⁹ s, which corresponds to a "photo finish" margin of Δs = 300,000,000 m/s × Δt = 18 m. Assuming that neutrinos can reach but not exceed the speed of light, 18 m would be the shortening provided by the chord. The angle of relativistic gravitational deflection of light, in radians, can be taken as φ = 4GM/(c²R) = 4 × 6.67×10⁻¹¹ × 5.97×10²⁴ / (6.37×10⁶ × 9.00×10¹⁶) ≈ 2.78×10⁻⁹ rad. To obtain the deviation in meters at the arrival point, it is enough to propagate this deflection linearly over the 730 km distance: 2.78×10⁻⁹ × 7.3×10⁵ m ≈ 2×10⁻³ m, i.e. about 2 mm. Propagating the deflection over only 18 m, the deviation from the natural geodesic would be approximately 5×10⁻⁵ mm. It would then be possible to test the hypothesis of trajectories between two points in curved spacetime that are shorter than a geodesic, exploiting the high penetrating power of neutrinos in matter. To do this, the beam should not be fired at zero elevation but with a properly calculated downward slope. "On paper", the downward inclination the neutrino beam must have in order to travel along the chord of the circular segment should be about 3.28 degrees (half the central angle) with respect to the plane tangent to the Earth's surface. This means that an "ideal" tunnel under the Earth's surface would have a slope of approximately 5.7% with respect to the plane tangent to the Earth's curvature at its entrance.
If the neutrinos travel in a straight line, their trajectory is exactly the chord, which for anyone digging it would be a dip (a descent followed by a climb back to the surface, since gravity must be reckoned with); from a purely geometrical (and Euclidean) point of view, however, the path would simply be a straight segment deviating by a couple of millimeters from the "natural" geodesic. In any case, the neutrinos would be doing something not predicted by theory, even without exceeding the speed of light. This also means that, for very long accelerators, one could consider digging a tunnel that "cuts across" the path traveled by electromagnetic radiation. In practice, this would mean crossing a Euclidean "physical" three-dimensional space beneath the light trajectories bent by gravity, which would no longer be the shortest lines between points, and perhaps reaching the stars more quickly than has been thought possible until now.
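The arithmetic in the CERN to Gran Sasso estimate can be checked with a short script. It uses only the input values quoted in the text (chord, Earth radius and mass, the 60 ns advance, the rounded speed of light) and the same linear propagation of the deflection angle; the variable names are illustrative.

```python
import math

# Input values as quoted in the text.
R_EARTH = 6.378e6   # Earth's radius, m
CHORD = 730e3       # CERN to Gran Sasso chord, m
DELTA_T = 60e-9     # presumed neutrino advance, s
C_LIGHT = 3.0e8     # speed of light, rounded as in the text, m/s
G = 6.67e-11        # gravitational constant, m^3 kg^-1 s^-2
M_EARTH = 5.97e24   # Earth's mass, kg

# Central angle subtended by the chord: chord = 2 R sin(theta / 2).
theta = 2 * math.asin(CHORD / (2 * R_EARTH))

# Beam inclination below the local tangent plane is half the central angle.
dip_deg = math.degrees(theta / 2)
slope_percent = 100 * math.tan(theta / 2)

# "Photo finish" distance corresponding to the 60 ns advance.
delta_s = C_LIGHT * DELTA_T

# Deflection angle as used in the text: phi = 4 G M / (c^2 R), with R = 6.37e6 m.
phi = 4 * G * M_EARTH / (C_LIGHT**2 * 6.37e6)

# Linear propagation of that angle over the whole chord and over 18 m, in mm.
offset_chord_mm = phi * CHORD * 1e3
offset_18m_mm = phi * delta_s * 1e3

print(f"theta = {theta:.4f} rad, dip = {dip_deg:.2f} deg, slope = {slope_percent:.1f} %")
print(f"delta_s = {delta_s:.1f} m, phi = {phi:.3g} rad")
print(f"offset over chord = {offset_chord_mm:.2f} mm, over 18 m = {offset_18m_mm:.1e} mm")
```

Up to rounding, the printed values should match those in the text: a central angle of about 0.114 rad, an inclination of roughly 3.3 degrees, a slope of about 5.7%, Δs = 18 m, φ ≈ 2.78×10⁻⁹ rad, about 2 mm over the full chord and about 5×10⁻⁵ mm over 18 m.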

Stefano Gusman
